Agree with me: we use computers for literally everything. Ever since I was a little girl, I’ve been obsessed. Playing Tetris on what my dad calls a ‘256 MB dinosaur’ and making PowerPoint presentations on anything that caught my fancy because, hello, who didn’t love playing with slides? I did presentations about volcanos, clothes, etc. And oh, the hours spent on the Cartoon Network website or diving into the iconic online chat world of mipunto.com with my cousin—those were the days!

Since then, computers (or “computerized devices”) have become my lifeline. They keep me connected with my family and friends (super important when they’re miles away), let me binge-game, and so much more. Life without them? I can’t even imagine it; I don’t want to.

But let’s get real: computers aren’t perfect. Every few months, I’m drooling over the latest graphics card or eyeing the newest AI-powered smartphone (still figuring out what people believe AI really does). And then there’s the latest Super Mario: the same good old game, but the graphics? Unreal. The mustache guy looks more HQ than I remember. We’re always craving better performance, sharper graphics, faster simulations, and more speed from our tech.

Have you ever wondered what goes on behind the scenes to make all this happen? Beyond the cool new features, a lot of work and science is involved. Scientists have always wanted to reduce the time needed to solve “problems,” but this is about more than time; it is about complexity. So the real question is: how can I make my computer solve more complex problems than it could before? It’s all about tackling complex problems more ingeniously.

Complexity is about opening new doors to puzzles we couldn’t even touch before. Imagine trying to solve a massive, intricate jigsaw puzzle. Earlier computers might only handle the corners and edges, but with advances in tech, we can now tackle the whole picture, piece by detailed piece. It’s about giving our machines the brainpower to take on challenges that were once beyond our reach.

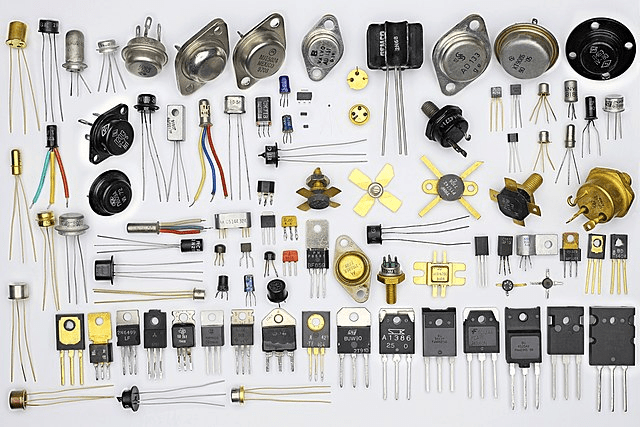

We learn a bit like that, too. The first time I saw a computer opened up, I felt like I was witnessing the place where all the magic came from. I still feel that way when I clean my laptop. But now I can recognize some parts in all that beautiful complexity, such as the motherboard, graphics card, and hard drive. Some of the magic happens in things I cannot easily see, such as the transistors.

The creation of transistors, the tiny switches that power everything our gadgets do, from playing a video to sending a message, was a game-changer back in the day. As a clever guy named Moore (co-founder of Intel) pointed out, the number of these micro wonders we can fit on a chip has roughly doubled every couple of years, making our devices smaller, faster, and cheaper.

This is Moore’s Law. This means our phones and laptops are getting faster and can do more cool stuff every few years without getting more expensive.
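
To put a rough number on that, here is a tiny Python sketch. It reads Moore’s observation as “the transistor count on a chip doubles about every two years,” which is a rule of thumb and not an exact law. The starting point (about 2,300 transistors on Intel’s 4004 chip in 1971) and the strict two-year doubling period are my simplifying assumptions, and `projected_transistors` is just a name I made up for this illustration.

```python
def projected_transistors(start_count, start_year, year, doubling_years=2.0):
    """Project a transistor count, assuming it doubles every `doubling_years`."""
    # Number of doublings that fit between the start year and the target year.
    doublings = (year - start_year) / doubling_years
    return start_count * 2 ** doublings


# Assumed starting point: roughly 2,300 transistors in 1971 (Intel 4004).
for year in (1971, 1981, 1991, 2001, 2011, 2021):
    count = projected_transistors(2300, 1971, year)
    print(f"{year}: about {count:,.0f} transistors")
```

Real chips don’t follow this curve exactly, but the sketch shows why “doubling every couple of years” adds up so quickly: fifty years is twenty-five doublings.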

Remember the bulky phones from primary school? Damn, I remember my first phone; I could have killed another kid with one of those. Fast forward, and now we have devices that fit in the palm of our hands (or even on our wrists, hello smartwatches!) and do way more.

In my mundane daily life, I can keep myself entertained without needing much. But even with all these advancements, there’s a catch. My old phone struggles with the newest apps, while my newer phone breezes through them. And don’t get me started on my laptop gasping through data analysis with Python.

Or, to give you another example: Did you know that scientists had to transport the hard drives by plane for the first-ever image of a black hole? This was due to the massive amount of data collected from seven different radio telescopes worldwide, about five petabytes. The data was so large that it was faster to physically fly the hard drives to the processing facilities.
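
If you are curious why flying the drives wins, a quick back-of-the-envelope calculation helps. The sketch below estimates how long five petabytes would take to send over a network link. The link speeds are hypothetical values I picked for illustration (I don’t know what connections the observatories actually had), and it ignores real-world overhead, so treat the results as optimistic lower bounds.

```python
PETABYTE = 10**15  # bytes, using the decimal definition


def transfer_days(size_bytes, link_gbit_per_s):
    """Days needed to send `size_bytes` over a link of the given speed."""
    bits = size_bytes * 8
    seconds = bits / (link_gbit_per_s * 10**9)
    return seconds / 86_400  # seconds in a day


# Hypothetical sustained link speeds, in gigabits per second.
for speed in (0.1, 1, 10):
    days = transfer_days(5 * PETABYTE, speed)
    print(f"{speed} Gbit/s: about {days:,.0f} days")
```

Even at a steady 1 Gbit/s, the transfer would take more than a year, so a box of hard drives on a plane is hard to beat.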

So, what’s next when we hit the limit of our current technology? Several emerging technologies are trying to answer this question. That’s where quantum computing enters the chat. Just as we went from bulky phones to sleek smartphones, scientists are now looking at quantum computing as a new way to make computers even more powerful.

So far, I have only talked about classical computing. It is called classical because it doesn’t yet use the power that could come from quantum mechanics. Imagine switching the basic building block of computer information from bits to quantum bits, or qubits, offering a whole new level of computing power. This could be one key to breaking through those old limits.

We will talk more about it in the following entries. Are you excited to dive deeper into quantum computing in upcoming posts? Catch you later!
